a robotic being

you want to be with

navel is a social robot of the next generation

What makes navel special?

Through the interplay of deep tech and innovative interaction design, navel has unique social capabilities and offers a new form of user experience.

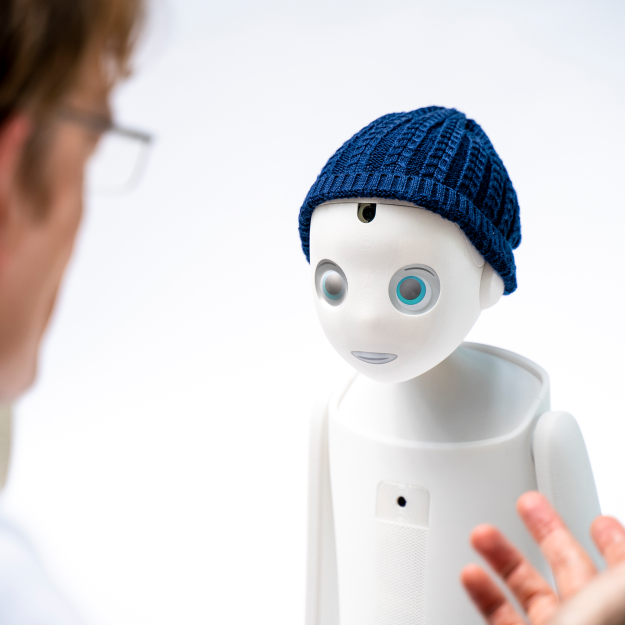

Simply enjoy the presence of the likeable robot figure navel and don’t worry about how it has to be “operated”, because navel communicates with you in a completely natural way.

We are proud to have won the Digital Health Award 2024 with navel! This underlines the revolutionary role that robotics and AI will play in the healthcare sector, especially when they are combined with human warmth and empathy.

Almost 100 applicants from the digital startup environment took part. The discussions with the high-caliber jury and the Federal Ministry of Health were very helpful.

What our customers and users in care homes say about navel

navel has been in use in several care homes in Germany since fall 2023. Both the residents and the nursing staff are delighted with navel, have a lot of fun and say things like:

- “Navel creates a good atmosphere here”

- “You can have a really good chat with him”

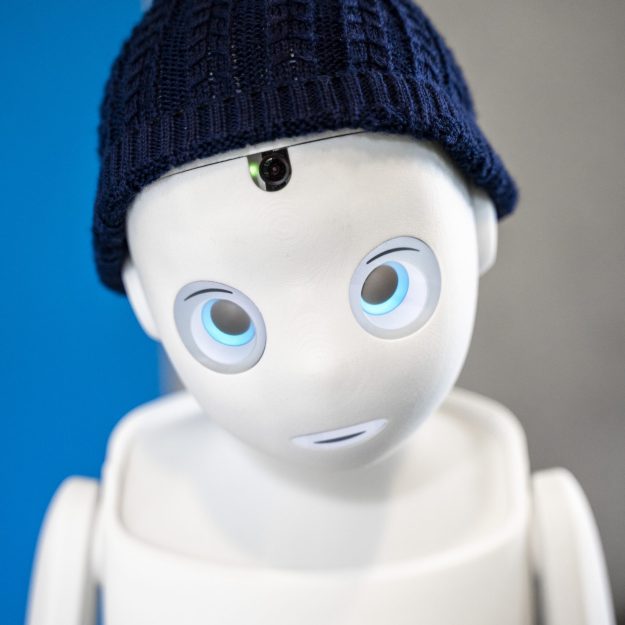

- “a funny little guy with such a nice hat and blue eyes”

- “not just a toy, but he can really do something”

The following film shows excerpts from reports on various television stations. Although the film is in German, it should still convey the effect navel has on people. Incidentally, the care homes give navel names of their own, such as “Emma”, “Ricky” or “Oskar”, which integrates navel even more as a friendly companion.

a single glance says

more than 1000 words

In which fields can navel be used?

As a research robot, navel enables research into new methods in social human-robot interaction.

As a care robot, navel autonomously activates people in need of care, increases their well-being and relieves caregivers.

As a service robot, e.g. in retail, navel wins people over and at the same time offers helpful information and services.

This makes navel different from other social robots

navel has 10 times the computing power of comparable robots.

The latest generation of NVIDIA edge devices, which are also used for autonomous driving, run neural networks and algorithms for computer vision and sound processing in real time.

On this platform, navel with its clever software architecture can process twice as many signals at five times the rate of Pepper, for example.

The result is a very lively and unique empathic behaviour that makes navel so likeable.

Like voice assistants, navel understands speech, and it speaks with a distinctive, very emotional voice.

But what makes navel unique are its non-verbal capabilities: navel detects our non-verbal signals, such as facial expressions or the direction of our gaze and body, and in turn reacts to them with expressive facial expressions and lively gestures. Instead of coming from an offstage voice, its words become visible through matching emotion and lip movements.

This makes communication with navel very human-like, so that anyone can interact with navel quite intuitively – in much the same way as with a small child or a pet.

Eye contact is the foundation of all social interaction, yet only a few social robots besides navel have mastered it.

navel has eyes that no other robot has! Special 3D optics are mounted above the displays, giving navel real three-dimensional eyeballs. This matters because real eye contact is not possible with eyes that are only shown on a flat display.

Because navel uses its camera to recognise where the eyes of its conversation partner are, navel can look exactly into their eyes. And because a static stare is unpleasant, navel has natural eye movements that continuously change the focus, including gaze aversion.
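The alternation between eye contact and gaze aversion described above can be pictured with a small scheduling function. This is only a minimal sketch under stated assumptions, not navel's actual behaviour: the function name `gaze_target`, the fixed timing values and the aversion offset are all hypothetical, and natural gaze would vary its timing rather than repeat a fixed cycle.

```python
def gaze_target(t, eye_pos, contact_s=3.0, avert_s=0.5,
                avert_offset=(0.25, -0.125)):
    """Return the point to look at, at time t (in seconds).

    eye_pos is the detected eye position of the conversation partner
    in normalised image coordinates (x, y). All default values are
    hypothetical and chosen only for illustration.
    """
    cycle = contact_s + avert_s
    phase = t % cycle
    if phase < contact_s:
        # Eye contact phase: look exactly at the detected eyes.
        return eye_pos
    # Aversion phase: briefly look slightly past the partner's eyes.
    return (eye_pos[0] + avert_offset[0], eye_pos[1] + avert_offset[1])


partner_eyes = (0.5, 0.5)
during_contact = gaze_target(1.0, partner_eyes)
during_aversion = gaze_target(3.2, partner_eyes)
```

With these assumed values, the gaze rests on the partner's eyes for three seconds and then shifts away for half a second before returning.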

Like any living creature, navel continuously detects many signals from its environment and reacts in real time. In this way, navel can also localise sources of noise, among other things, and orient itself to what is happening around it.

navel is mobile and will be able to move autonomously in the human environment. With its quiet head motors, navel can move its head in a lively way and in all directions.

navel’s variety of facial expressions is limitless. In addition to the basic emotions, navel can use additional grimaces and gestures and interpolate continuously between all of these. To ensure that its facial expressions never remain rigid, navel shows natural fluctuations depending on the situation.
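Continuous interpolation between expressions can be pictured as blending two sets of face parameters. The following is a minimal sketch, not navel's implementation: the `Expression` class, its parameter names (`brow_raise`, `mouth_curve`, `eye_open`) and all values are hypothetical and serve only to illustrate linear interpolation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Expression:
    """Hypothetical face parameters, each in the range -1.0 to 1.0."""
    brow_raise: float
    mouth_curve: float
    eye_open: float


# Illustrative values only; not taken from navel's software.
NEUTRAL = Expression(brow_raise=0.0, mouth_curve=0.0, eye_open=0.5)
HAPPY = Expression(brow_raise=0.5, mouth_curve=1.0, eye_open=0.75)


def blend(a: Expression, b: Expression, t: float) -> Expression:
    """Linearly interpolate from a (t=0) to b (t=1); t is clamped to [0, 1]."""
    t = max(0.0, min(1.0, t))
    return Expression(
        brow_raise=a.brow_raise + (b.brow_raise - a.brow_raise) * t,
        mouth_curve=a.mouth_curve + (b.mouth_curve - a.mouth_curve) * t,
        eye_open=a.eye_open + (b.eye_open - a.eye_open) * t,
    )


# Halfway between neutral and happy.
half_smile = blend(NEUTRAL, HAPPY, 0.5)
```

Calling `blend` with a slowly changing `t` on every frame would give a smooth transition; adding a small time-varying offset to `t` is one possible way to picture the natural fluctuations mentioned above.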

To interact autonomously in a human environment, a robot needs social intelligence. navel can process social signals such as the emotion, body orientation and gaze orientation of several people simultaneously in real time. This will allow navel to approach and address people in a situationally appropriate, autonomous and proactive way.

Navigation in the human environment must be safe and empathetic. navel will always maintain an appropriate distance and approach sensitively from the front.
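Approaching from the front at an appropriate distance can be expressed as a small geometry problem: pick a goal point in the direction the person is facing and turn the robot toward them. The sketch below is an illustration under assumptions, not navel's navigation code; the function name `approach_pose` and the default distance of 1.2 m are hypothetical.

```python
import math


def approach_pose(person_x, person_y, person_heading, distance=1.2):
    """Return (x, y, heading) for a goal directly in front of a person.

    person_heading is the direction the person faces, in radians.
    distance is a hypothetical comfort distance in metres.
    """
    # Place the goal along the person's facing direction.
    goal_x = person_x + distance * math.cos(person_heading)
    goal_y = person_y + distance * math.sin(person_heading)
    # Turn the robot so that it looks back at the person.
    robot_heading = math.atan2(person_y - goal_y, person_x - goal_x)
    return goal_x, goal_y, robot_heading


# A person at the origin facing along the x-axis: the goal lies one
# metre in front of them, with the robot turned to face them.
goal_x, goal_y, robot_heading = approach_pose(0.0, 0.0, 0.0, distance=1.0)
```

A real planner would also have to account for obstacles and for other people nearby; this sketch only covers the choice of the goal pose.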

navel has various room sensors so that it will find its way around even in unfamiliar spaces.

Social robots continuously collect very personal and sensitive data via cameras and microphones in order to act in a socially intelligent way.

Thanks to its powerful on-board processor, navel can process all visual data itself in real time. This personal data therefore does not have to be sent to a cloud for processing, as is usually the case with other social robots. So no personal images leave navel.

In addition, the images are deleted within fractions of a second after evaluation.

Use Cases

for your specific requirement

navel

care

navel has been used in several nursing homes since October 2023. Thanks to its empathic abilities and the integration of large language models, navel provides additional emotional and cognitive activation.

Find out more about navel in care here.

If you are interested in purchasing navel care, please contact us.

navel

research

Researchers and developers get full access to all of navel’s capabilities:

- Python SDK to program custom behaviour with direct access to all functions
  - all high- and low-level data
  - video and audio streams
  - all actuators (locomotion, head and arm movement, voice output, …)
- Browser application to control the robot without programming
  - for quick Wizard-of-Oz tests
  - basic emotions, gestures and sequences
  - own libraries can be created

For questions and orders please contact us.

Technology

Software

| Component | Details |
| --- | --- |
| OS | Linux |
| Computer Vision (10 fps) | Face detection, person identification, emotion recognition, head pose, eye gaze |
| Sound Processing | Sound source localization, beamforming |
| Natural Language Processing | STT: via cloud service, e.g. Google; TTS: Acapela (30 languages); Dialog Manager: LLM-based, e.g. GPT |
| Navigation | SLAM (in planning), socially aware proxemics |
| SDK | Python SDK |

Hardware

| Component | Details |
| --- | --- |
| SoC | NVIDIA® Jetson AGX Xavier™; 15 GB for user data |
| Cameras | Head: 80°, 720p, 60 fps, global shutter; Body: 160°, 720p, 60 fps, global shutter |
| Microphones | 3D array of 7 microphones |
| 3D Sensors | Intel® RealSense™ Depth Module D430; 2x lidar sensors; 3x sonar sensors |
| Displays | 3x round displays; 3x 3D lenses |
| Motors | Head: 3x quiet gimbal motors; Drive: 2x 65 W motors; Shoulder: 2x servo motors; Tilt: 2x linear motors |
| Speaker | 2x 4 W broadband loudspeakers |
| Battery | 288 Wh Li-ion batteries |
| Connectivity | LAN, WLAN |
| Size | Height 72 cm |
| Weight | 8 kg |
| Cap | Wool |