This short clip shows our prototype for the visual perception pipeline we are currently developing for our social robot. The visual perception pipeline features deep neural networks optimized for inference on the NVIDIA Jetson Xavier AGX, which comprises

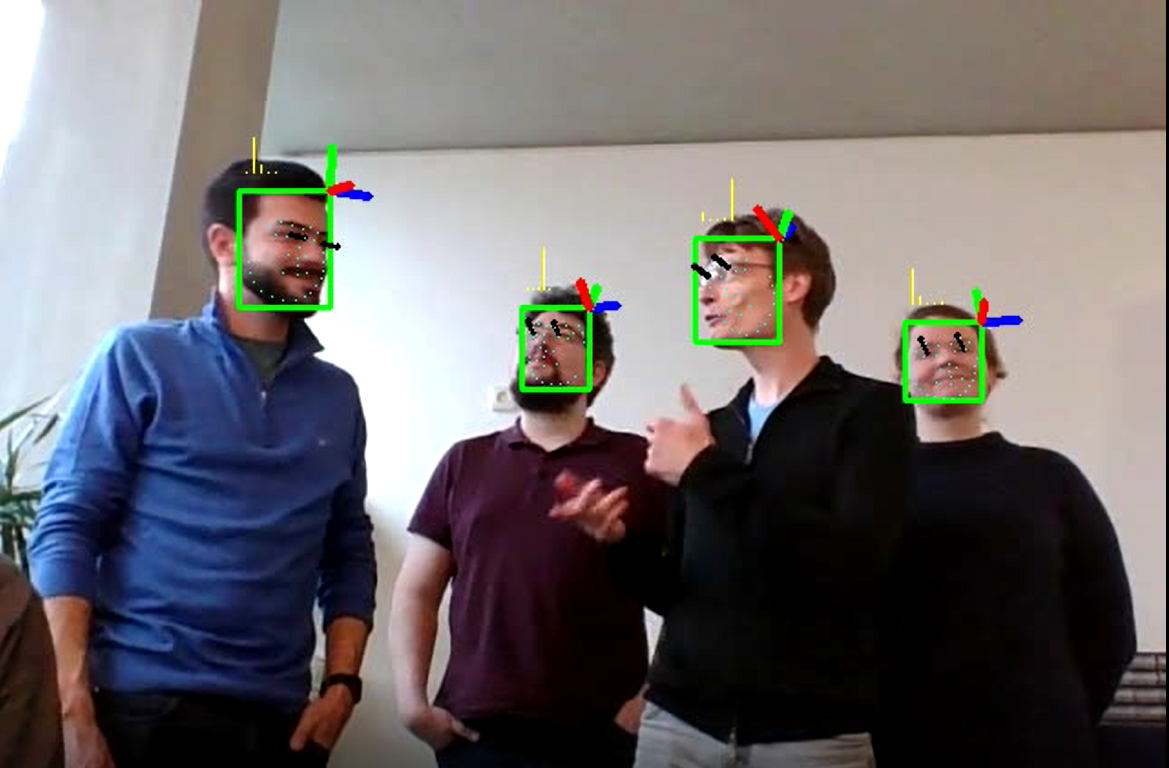

- the mtcnn face detector for face detection comprised out of 3 convolutional neural networks (CNN) (green boxes around faces),

- a small CNN for head pose estimation (coordinate systems on the top right of the green boxes),

- another CNN for facial expression recognition (yellow bars on the top left of the green boxes indicating the confidence in detecting various facial expression states hinting at 5 basic emotions),

- yet another CNN for detecting facial landmarks (turquoise points inside the green boxes)

- last but not least a final CNN for estimating the direction of gaze of a person (black arrows coming out of the eyes).

All in all we are very happy to be executing 7 neural networks cascading into one another in real-time thanks to NVIDIAs TensorRT library and NVIDIA Tensor Cores. We are able to detect various facial expressions/features of multiple people at once, which will be the foundation for our social AI. Right now we are developing our pipeline to incorporate even more networks so VIVA can be the best social robot you could ever dream of, so stay tuned!